In the ever-connected, digital world of today, we have seen an explosion in the amount of data created by billions of stakeholders from around the world. This exponential growth in the scale and volume of this data has allowed us to extract valuable information and insights from this data.

However, the existence of such large amounts of individual data has given rise to a new set of challenges, particularly regarding privacy concerns. For example, a study conducted by Latanya Sweeney in 1997 found that 87% of the United States population could be uniquely identified using three (publicly available) features – their age, date of birth, and ZIP code [1,2]. The presence of large amounts of such identifying data makes it significantly easier for any (potentially malicious) actors to carry out linkage attacks or other de-identification attacks [3].

In this article, we explore a method to generate differentially private synthetic data for a voting dataset, using features like age, education level, and sex. Synthetic data emerges as a promising approach to overcome the challenges mentioned earlier. By generating artificial data that preserves the statistical properties of real data while ensuring the anonymity of individuals, synthetic data offers a way to unlock the value of data without compromising privacy. By using differentially private mechanisms, we can also ensure that our data is generated by following a mathematical guarantee of being private, and so no identifying information about the individuals in the original data is released. Well-generated synthetic data aims to preserve relationships between attributes of the original data, allowing it to be used for analysis and to be publicly released without the risk of privacy loss. We examine generating synthetic data using a “classical” stratified distribution-based approach.

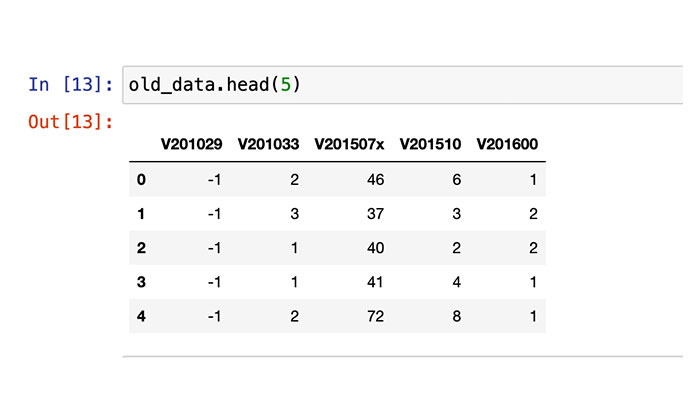

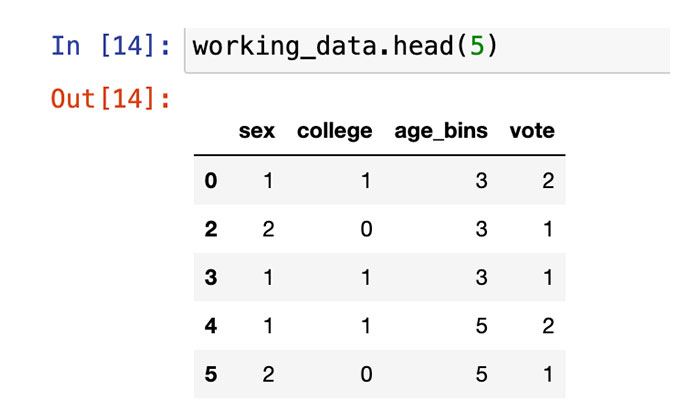

We work on data collected by the American National Election Studies, which surveyed over 8,000 people on their choices of candidates in the 2020 United States presidential election [4]. From the raw data, we extracted features like age, education status, and sex, along with voting intention, and simplified and transformed them into categorical values.

(The raw data, before and after pre-processing)

Our three features – sex, college, age_bins – all have significant predictive potential. After our processing, sex is a binary variable, with value 1 indicating male and 2 indicating female. We simplify a range of education levels into a binary variable called ‘college’, where 1 signifies that the voter has a college degree. Lastly, we binned age into six groupings, starting from 18-25 as the first bin, up to 80+. The target variable, vote, is a binary variable with two possible values: 1 indicates a vote for the Democratic Party and 2 indicates a vote for the Republican Party.

Following this, we then consider all our columns together, creating joint distributions of our n features across all possible combinations (n here refers to the total number of features). For each distribution, we then find the total count of votes received by each candidate. For example, for college-educated female voters with ages between 18–25 (a case we will later revisit), we find the number of votes each candidate receives: among the 98 voters in this bracket, we find that the Democratic candidate received 73 votes and the Republican candidate received 25 votes.

Before we proceed, it might be helpful to go over the mathematical definition of differential privacy, and its corresponding guarantee. According to the definition, a randomised mechanism is ε-differentially private, if:

P [ M(Dn) = S] / P [ M(Dn+1) = S] < eε,

for all possible outputs S, and any two neighbouring datasets Dn and Dn+1.

Here, S refers to a specific output of the mechanism M when applied on either dataset, and Dn and Dn+1 are neighbouring datasets, i.e, two possible datasets where Dn+1 has one record more or less than Dn. ε is a positive parameter which quantifies our privacy guarantee; here, the value of ε determines the trade-off between privacy and utility. Smaller values of ε imply higher levels of privacy at the cost of accuracy, while larger values of ε have more accuracy at the cost of lowered privacy protection.

A popular approach to ensure differential privacy is the Laplace mechanism. In this, we add noise to our query results directly proportional to the sensitivity of the query (denoted by s) and inversely proportional to the privacy parameter ε, as shown below. The sensitivity of a query is the maximum difference between the output of the same query on neighbouring datasets. Since we assume that only our target variable – the voting preference – is sensitive, we add noise to it following the below operation:

M(D) = f(D) + Lap(s/ε)

Now that we have the counts of the votes of each candidate, we can follow the above mechanism to ensure differential privacy. The queries we work with here are counting queries, i.e., where we count the number of rows in a dataset fulfilling some property being queried for. We can see that these counting queries have a sensitivity of 1 (as the maximum difference in the output of any counting query on any two neighbouring datasets will be 1). This allows us to add Laplace noise corresponding to the sensitivity and privacy parameter ϵ, as described above.

Now, after we add noise to both counts (the vote counts of each party), which satisfies differential privacy, we can find the noisy probabilities of a member of that specific strata voting for a candidate. Knowing this distribution allows us to generate “artificial” or synthetic records from the group.

In this small demo sample from our code, we show the generation process for a specific strata: college-educated female voters with ages between 18–25. After we get their vote counts for both candidates, random Laplace noise is added to both values (making the counts differentially private), and we calculate the probabilities associated with both candidates. These probabilities are used when synthesising data of that specific strata, to ensure we get an accurate distribution.

This stratified approach to generating synthetic data carries significant advantages. For example, while we seek to preserve the relationships between attributes, authentically collected data sometimes suffers from class imbalance, where some class values can be over-represented through sampling error. In such cases, synthetic data can be generated from the original data such that all classes and strata are represented equally. The stratified approach also gives users the flexibility to generate data only concerning specific groups (in our example, it could be used to generate data about the voting intentions of only senior citizens). Along with that, the addition of noise ensures that this synthetic data is differentially private, which ensures that any one individual’s sensitive data will not be exposed.

However, there are drawbacks in working with such an approach to generating data. When we work with more complex datasets, which have a similar number of records, but many more attributes with a wide range of values, the number of records in any individual strata will decrease. Conversely, this means that the noise added to our counts will have a disproportionately higher impact, which will result in the generated data being less accurate. This approach also requires us to categorise our data (as we had to do with age, for example), through which we lose some degree of information about our records.

Other approaches to generating differentially private synthetic data involve deep learning, where a model is trained on existing data. While the output of these generative models is not differentially private on its own, algorithms like DP-SGD can be used in the training process to make the synthetic data differentially private [7].

In conclusion, synthetic data generation is an effective way to generate differentially private data, thereby protecting the sources of the data, while preserving the relationships and correlations in the original data from which insights can be drawn.

Sources and References:

- Latyana Sweeney, “Simple demographics often identify people uniquely,”

URL: https://dataprivacylab.org/projects/identifiability/ - Latyana Sweeney, “Weaving technology and policy together to maintain confidentiality,” Journal of Law, Medicine & Ethics, vol. 25, no. 2–3, p.98–110, 1997.

- Arvind Narayanan and Vitaly Shmatikov, “Robust de-anonymization of large sparse datasets,” Proc. IEEE Symp Sec Priv. 111-125. 10.1109/SP.2008.33.

- American National Election Studies:

URL: https://electionstudies.org/data-center/2020-time-series-study/ - National Institute of Standards and Technology, U.S. Department of Commerce, “Guidelines for evaluating differential privacy guarantees,”

DOI: 10.6028/NIST.SP.800-226.ipd - Joseph P. Near and Chiké Abuah, Programming differential privacy,

URL: https://programming-dp.com/ - Martin Abadi, Andy Chu, Ian Goodfellow, Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang, “Deep learning with differential privacy,” Proc. 2016 ACM SIGSAC Conference on Computer and Communications Security, 308-318. 10.1145/2976749.2978318.

Author:

Related Posts

- CDPG

- July 25, 2024

Exploring India’s Wind Patterns using data

India’s energy sector is significantly propelled by its robust indigenous wind power indu ..

- CDPG

- November 25, 2021

Ushering New Era with IoT and 5G

Suresh Kumar, VP – Deployments, IUDX recently participated in a panel discussion on ̶ ..

- CDPG

- May 28, 2024

Sustainability in the Information Society

In 1987, the World Commission on Environment and Development of the United Nations released Our ..